First, a slightly broader definition: in principle, variant configuration (VC) can be described as the process of developing, manufacturing and maintaining several versions of a product to meet different customer requirements. This matters because it helps companies manage product complexity and variability while meeting different customer and market requirements. The method plays a central role in many industries: in the manufacture of drilling rigs, giant rock excavators and related equipment, but also more generally in, for example, the automotive and heavy machinery industries, and even in software development. One aim of VC is to tailor products to specific needs and wishes without dramatically changing the entire production process.
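To make the definition more concrete, the core idea of rule-based variant configuration can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: the features, options, rules and module codes below are invented for the example and are not taken from Epiroc or from any particular VC product. A customer selects one option per feature, the rules reject combinations that cannot be built, and each valid selection resolves to a list of standard modules.

```python
# Hypothetical feature model: each feature has a fixed set of allowed options.
FEATURES = {
    "power": {"diesel", "battery"},
    "cab": {"open", "closed"},
    "boom": {"single", "twin"},
}

# Hypothetical compatibility rules:
# if feature A has value X, then feature B must be one of the allowed values.
RULES = [
    ("power", "battery", "cab", {"closed"}),
    ("boom", "twin", "power", {"diesel"}),
]

# Hypothetical mapping from each selected option to the standard module that realizes it.
MODULES = {
    ("power", "diesel"): "ENG-DIESEL",
    ("power", "battery"): "ENG-BATTERY",
    ("cab", "open"): "CAB-OPEN",
    ("cab", "closed"): "CAB-CLOSED",
    ("boom", "single"): "BOOM-1",
    ("boom", "twin"): "BOOM-2",
}


def validate(selection: dict) -> list:
    """Return a list of error messages; an empty list means the selection is buildable."""
    errors = []
    for feature, options in FEATURES.items():
        value = selection.get(feature)
        if value not in options:
            errors.append(f"{feature}: invalid or missing option {value!r}")
    for if_feat, if_val, then_feat, allowed in RULES:
        if selection.get(if_feat) == if_val and selection.get(then_feat) not in allowed:
            errors.append(f"{then_feat} must be one of {sorted(allowed)} when {if_feat} is {if_val!r}")
    return errors


def configure(selection: dict) -> list:
    """Validate a customer selection and resolve it to a sorted list of module codes."""
    errors = validate(selection)
    if errors:
        raise ValueError("; ".join(errors))
    return sorted(MODULES[(feature, selection[feature])] for feature in FEATURES)


if __name__ == "__main__":
    print(configure({"power": "battery", "cab": "closed", "boom": "single"}))
```

The module catalogue stays the same for every variant; only the selection changes. That mirrors the idea of tailoring products without redesigning the production process for each order.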

The importance of the digital thread

A particularly interesting aspect of what John Nydahl has to say concerns, as mentioned above, the digital thread. It plays a supporting role in the setup Epiroc is planning, together with digital twins, simulation solutions and IoT-connected sensors that feed back data about rigs in active service in the field. There is more, but it boils down to the fact that Nydahl and his team take a holistic approach, which matters in this context.

But what exactly is the digital thread? A sharp definition comes from Peter Bilello, head of the PLM analyst firm CIMdata:

”Essentially, the digital thread is a map of decision nodes, which looks like a kind of spider web,” he says. ”For some, such a network is actually a more realistic representation of how data and processes are interconnected in companies during their products’ life cycles. These webs conceptually need to connect hundreds, thousands and perhaps millions of information nodes, data storages and repositories, and that is where the value is expected to be found.”

Challenging? Absolutely. But according to Bilello, a digital thread that is properly laid out, implemented and equipped with relevant digital tools and processes can deliver major gains in productivity, quality, cost development, time to market and other benefits that can accompany highly automated and AI-controlled industrial processes.
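Bilello's picture of a web of information nodes can be illustrated, very loosely, as a directed graph in which each node is a piece of product information and each edge points to the downstream nodes that consume its data. The sketch below is a hypothetical Python example with invented node names; it shows one practical benefit of such a map, namely tracing which downstream information is affected when an upstream node changes.

```python
from collections import deque

# Hypothetical digital-thread nodes; each edge points from an upstream
# information node to the downstream nodes that consume its data.
THREAD = {
    "requirement": ["cad_model"],
    "cad_model": ["ebom", "visualization"],
    "ebom": ["mbom", "sbom"],
    "mbom": ["work_instructions"],
    "sbom": ["spare_parts_catalog", "service_plan"],
    "visualization": [],
    "work_instructions": [],
    "spare_parts_catalog": [],
    "service_plan": [],
}


def downstream(graph: dict, start: str) -> list:
    """Return every node reachable from start in breadth-first order, excluding start."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


if __name__ == "__main__":
    # Which information must be reviewed if the eBOM changes?
    print(downstream(THREAD, "ebom"))
```

In this toy graph, a change to the eBOM flags the mBOM, sBOM, work instructions, spare parts catalog and service plan for review. A real digital thread would hold far more nodes and relationship types than this.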

Far from just a matter of technology

It is important to remember in this context that AI and technological advances are ultimately not just a technology issue. Just as much, perhaps even more, they concern the economic progress that can accompany increased process efficiency and higher product quality, with the potential to strengthen the company’s competitive and financial position. Lars Nydahl and Lena Gunnarsson, from PLM Experience Day’s organizing staff, summarized these broader aspects as follows:

”The fact that a product and its variants come with different approaches and requirements depending on which domain you are in naturally has its complications. It is about several things: the R&D angle, customer requirements, regulatory restrictions, manufacturing possibilities, serviceability and sales, to name a few factors with a big impact on the structure of the arrangement. The requirements and contact points must work even though they often sit in disparate IT and business landscapes. If you succeed in this, there are huge lead time gains and big money to save,” says Lars Nydahl.

”But even if, for example, product and sales configurators are not one and the same system, or do not share exactly the same modeling, they need to be able to work together,” adds Lena Gunnarsson. ”The experiences from the event also show that history, for example greenfield or brownfield aspects, is of decisive importance for where you start a project and how it then develops. Most of the time you have to start digging right where you are and then gradually expand the whole thing.”

An exciting vision

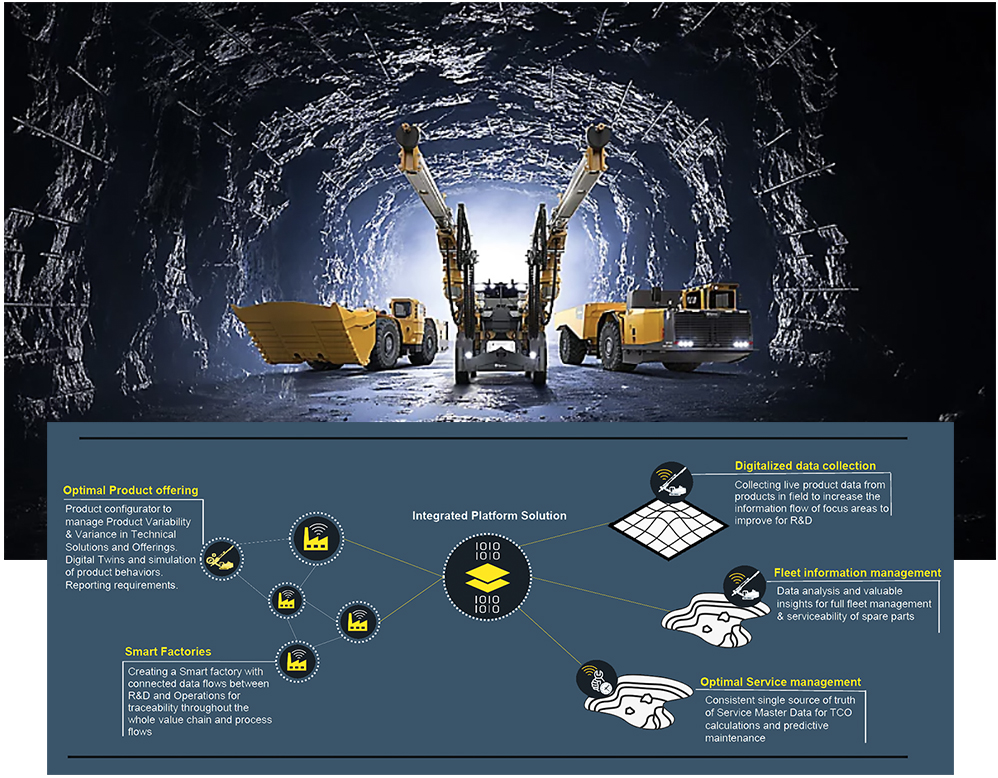

Back to Epiroc and John Nydahl: by any standard, the company is a major global player in the mining and infrastructure industries, with a range of both core products (drilling rigs and rock excavators) and complementary products (e.g. equipment) for surface and underground mining operations, road construction, tunnels and the like. Creating a platform for variant configuration on a local, smaller scale is difficult enough; managing it on a global scale is an even more complex challenge for any organization.

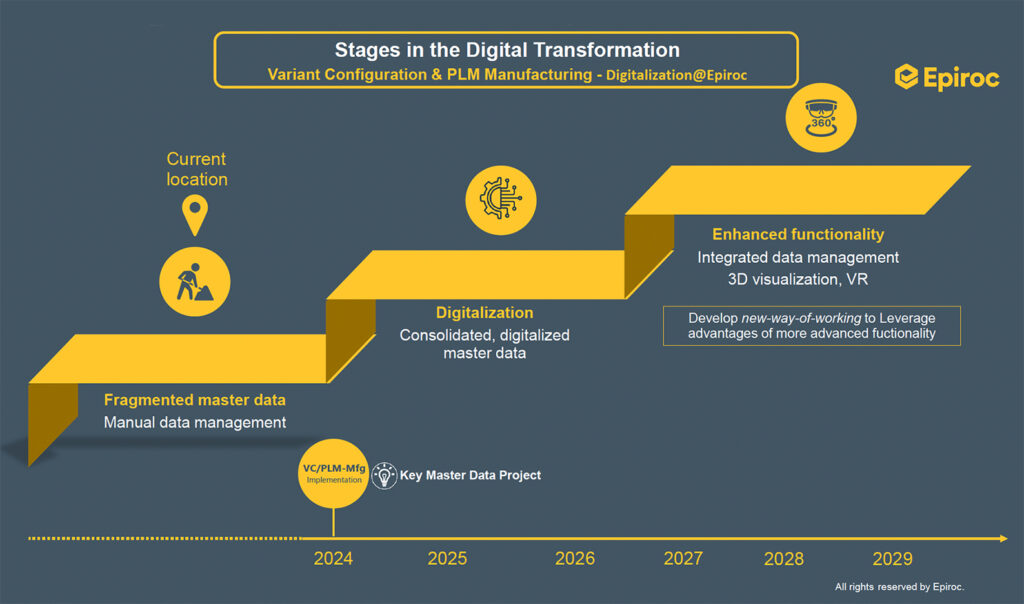

But Epiroc has an exciting vision for driving the modular composition and setup of this platform forward according to a three-year, step-by-step plan starting in 2024. How John Nydahl and his team envision the future can be seen in the picture below, which shows, among other things, that a crucial basic goal is to build an integrated common platform for the entire organization. It should include not only variant configuration (VC) but also a Product Master Data platform, not least to get a single, consolidated source of truth.

This is what the VISION looks like:

PTC Creo, Siemens Teamcenter and Infor M3

In terms of digital tools, Epiroc’s starting point is a varied digital process setup built on PTC’s CAD solution Creo, the primary design solution and the place where the eBOM (engineering Bill of Materials) is created. Creo is in turn connected to Teamcenter, the PLM/cPDm (collaborative Product Definition management) platform from Siemens Digital Industries Software, which serves as database and digital data backbone.

The setup also includes Infor’s classic ERP solution M3, and the team also works with Microsoft SharePoint and Excel.

Overall, this is a diversified but, in principle, by no means unusual combination for industrial IT and PLM support. It also shares a common problem: transactions between the systems require manual effort, and the systems are connected through a setup that has been built up gradually over a long period of time.

”To keep this together, we have established a very large aftermarket area, with maintenance plans and everything else required to keep a business running where our machines, to give some perspective, run 24 hours a day, every day, all year round in an open-pit mine, for example. So there is a great need to maintain and service the machines continuously,” comments Epiroc’s John Nydahl.

The disadvantages of manually managing product configuration

He also points out several disadvantages of working with manual management of the product configuration and product data.

”We even call them pain points, and a fundamental problem is the lack of a common digital platform that can consolidate product master data. This has several effects,” he says, listing the following consequences of managing data manually across multiple systems spanning R&D, Operations, Sales and Service:

- Poor lead-time development: Sales, R&D and Operations are not adapted to the available functions

- High labor intensity: in short, each manufacturing order (MO) is configured manually for manufacturing

- High error rate: the risk of introducing errors when transferring data manually is high

- Lower productivity: limited system support and few standardized formats reduce speed and efficiency

- Higher costs: sub-optimization, errors and misses drive up costs

The solution to keep the digital thread together

So, what does the journey look like, and what do Nydahl and his team want to achieve with their plans? As the picture above shows, the vision is comprehensive, notes John Nydahl (pictured left):

”What we see is the need for an integrated platform solution that can hold together the digital thread with everything from the optimal product, the design in the R&D department and a product configurator, to digital twins and representations of our products with the capacity to simulate product behaviors. Integrated with this, we also see smart factories, with linked information flows between the R&D department and the operational business. Furthermore, IoT sensors must be connected and collect data on how we build the machines, how they work in the field and also how we can move factories around the world in sustainable ways.”

All this is also supposed to be connected to the aftermarket: how to digitize data management, feed data back to the R&D department and, at the same time, keep track of Epiroc’s fleet of rigs, how it is serviced, how to optimize its presence on site and how to live up to service contracts and other emerging customer needs. All of it is underpinned by a database that manages the flow of information, with the principle that the same product data must apply everywhere. It is a wise principle, and its cornerstone is what is usually called a single source of truth: one database.

To realize the vision

Given this, where is Epiroc now?

”We are a good way along our path. We are good at many systems, but we see that on our digitization ‘staircase’, we are still some way from being able to put data together in a consolidated flow. We are good at working with data within the respective systems, but in order to arrive at a well-functioning, holistic, integrated function, we must digitize the management, format, connect and consolidate the data into a master data platform. Today, the systems do not fit together, and data must be moved manually between them. There are, for example, elements of Excel and other ways of handling processes that disturb smooth data flows.”

He also notes that much of what needs to be digitized exists as experience-based knowledge held by key individuals: ”it sits in the walls, so to speak, and with people who know this and understand how it works.” This knowledge needs to enter the digitized systems.

The same applies to fragmented data, such as data in non-standard formats, data archived at random, or data coming from external systems.

For the move towards an unbroken digital thread built on a platform with configured product master data in the PLM system, John Nydahl outlines six steps. What are they, and how are they connected?

A new way of working

”So, the first step is to digitize, format and consolidate the data; we make it templated to show ’this is how it should look,’ connecting the capabilities for configuration and also the technical solutions,” says Epiroc’s digitization leader.

This is what steps 1 and 2 look like in the new six-step way of working with digitized and consolidated master data in the PLM system. Moving on, Nydahl describes four interconnected components under the Product Master Data umbrella:

- Systems definition: product architectures, including logical, physical and system architectures; the product feature database, automated configuration and module management

- Design: the geometric representations, i.e. design of the technical solutions (the eBOM from the CAD system), management of Positional Variance and full product visualization, which can be further connected to a Virtual Reality environment, for example to conduct design reviews digitally or to show customers how something works

- Assembly: the manufacturing architectures, i.e. the mBOM (manufacturing Bill of Materials, built on the eBOM and transferred to the ERP solution), the BOP (Bill of Process, from the MES system) and EWI, or digital work instructions

- Service: the service architectures, i.e. the sBOM (service BOM, built on product data and produced in the EAM, or Enterprise Asset Management, system), the spare parts catalog and service plans

”In short, this is the basis of our digital thread,” sums up Nydahl. ”The path forward, the whole way from R&D to service is lined up, but we are not there yet. The sales part is missing, for example, in this picture, i.e. how members in the sales team can configure solutions together with the customers. But on the other hand, much of what is needed in relation to the customer and what they want is in the service pieces with maintenance plans, service plans, availability, etc.”
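The chain described above, in which the mBOM and the sBOM are built on the same product data as the eBOM, can be sketched as derived views over one master record. The Python example below is a simplified, hypothetical illustration: the part numbers and the `station` and `serviceable` attributes are assumptions made for the example, and real eBOM-to-mBOM and sBOM restructuring across PLM, ERP and EAM systems involves much more than grouping and filtering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    number: str
    description: str
    quantity: int
    station: str        # hypothetical assembly station, used to build the mBOM view
    serviceable: bool   # hypothetical flag: is the part replaced as a spare?


# Hypothetical master record: the eBOM as released from CAD/PLM.
EBOM = [
    Part("P-100", "Boom assembly", 1, "ST-2", False),
    Part("P-200", "Hydraulic hose", 4, "ST-2", True),
    Part("P-300", "Drill bit", 1, "ST-3", True),
    Part("P-400", "Cab frame", 1, "ST-1", False),
]


def derive_mbom(ebom: list) -> dict:
    """Group eBOM lines by assembly station (a simplified mBOM view)."""
    mbom = {}
    for part in ebom:
        mbom.setdefault(part.station, []).append((part.number, part.quantity))
    return dict(sorted(mbom.items()))


def derive_sbom(ebom: list) -> list:
    """Keep only serviceable parts (a simplified sBOM / spare-parts view)."""
    return [(part.number, part.description) for part in ebom if part.serviceable]


if __name__ == "__main__":
    print(derive_mbom(EBOM))
    print(derive_sbom(EBOM))
```

Because both views are computed from the same records instead of being re-keyed by hand, a correction made once in the master data shows up in manufacturing and service alike, which is the practical meaning of a single source of truth.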

He also notes that the software components making up the platform are being upgraded where needed, for example with additional VC capabilities in PTC’s Creo CAD and corresponding additions in the PLM data backbone, Siemens Teamcenter.

The implementation of the three-step staircase

This, then, is what Nydahl and his team want to implement according to the three-step ladder. But how?

”We have run a pilot jointly across two divisions, the R&D and Service parts. In this, we have worked extremely hard to first set up a configuration for the product architecture of the machine: how do we represent it in the best way, and how do we format the data so that it works downstream? Then we looked at the processes: how do they look today, and how do they work with all the diversified and manual elements? Then we ’translated’ this into the more complex and ’square’ requirements a system has in order to handle these pieces.”

The work has then consisted of moving this into the system and either adapting the processes to the system or, where relevant, the other way around. This is a complex process that requires a lot of testing to fine-tune and create smooth workflows.

”In this, we have looked at how the processes work in the system: Do the processes need to be changed or does the system need to be adapted?” summarizes John Nydahl.

These are the first steps in the ”Key Master Data project”, which follows Epiroc’s three-step ladder:

1. Present state 2024

2. Digitization, consolidation of master data during 2025-2026

3. Improved functionality with integrated data management, 3D visualization, VR and new ways of working implemented to reach new, advanced functionality from 2026-2027 and beyond

The organizational pieces primarily involved in the program are:

- R&D, regarding:
  - Product architectures and variant management
  - Digitized product master data

- Operations, regarding:
  - Digital manufacturing
  - Process management

- Service and Aftermarket, regarding:
  - Digitized service master data
  - Digitized spare parts catalogue

A tough journey, but the potential gains are great

Summing up, John Nydahl says that at the start the team was very theoretically oriented. The obstacles to starting a project of this magnitude are many, and there are intractable questions and objections. But eventually you reach a point where you have to get going to get around them.

”Certainly, we find problems here and there: processes that don’t work as they are, shortcuts that don’t work in logical sequences, or, as you also discover when you start to examine things more closely, errors in the data or missing data. And so on. But we are action-oriented and want to achieve results, so we analyse, change and push on. Let me also stress the importance of data quality in particular. It’s extremely important: correct data in, correct results out.”

This conclusion is very much in line with the latest findings in PLM. Nobody with the slightest knowledge of the complexity of modern industrial processes, PLM and AI has ever claimed that this is easy. It’s no walk in the park to transform a global organization. Junk in, junk out is, roughly speaking, one of the eternal truths in PLM and advanced process management.

Today, Nydahl further explained, higher demands are placed on things that previously could pass through the system without major consequences, or with consequences that could be fixed. Within the framework of a digital thread and a consolidated PLM and master data platform, the demands on data quality, among other things, are higher.

”Exactly, but when things end up where they’re supposed to and work as they’re supposed to, the potential gains with the system are extremely large. Already today, at this early stage, we have seen in R&D, for example, that we actually get a shorter time to market (TTM). We have gained a better picture of what our technical solutions really look like and can save lead time by reusing things that already exist. On the assembly and service side, too, we see very good improvements related to what has been implemented, for example in time to competence for technicians in the field. The documents are there, they are more easily accessible and they are generally more accurate than before, because they are based on the same data that was used in the design work,” concludes John Nydahl.

Bottom line, the Epiroc team has taken on a tough challenge. Doing this from a blank sheet, a so-called ”greenfield”, is difficult enough; doing it from a ”brownfield”, an existing organization with different product development centers and software sets on a global scale, is even more difficult. This is a journey that requires you to be armed to the teeth with the necessary digital tools, patience and readiness to establish new, smart processes.

But with the right persistence, top management backing, human and financial resources, software set-up and why not AI, there are very large profits to be made.